An artificial neural network (ANN) is a computing system that emulates the way biological neural networks function in humans. Sometimes simply called neural networks, ANNs are used to train AI systems to think and behave like humans.

In a previous blog post on what artificial intelligence is, I discussed how far AI systems still lag behind human intelligence. This is because copying biological neural networks is not simple. But computer scientists have been taking a stab at it for the past seven decades and have found some success too.

In this post, we will talk about how ANNs are built.

But before that, we need to understand how biological neural networks work. Here is a very brief overview.

What are biological neural networks

The biological neural system is responsible for transmitting information within our body via a network of cells called neurons. An adult body has close to 100 billion neurons communicating through electrical signals and chemical messengers.

If you jerk your hand the moment you touch a hot iron, that’s neurons at work.

If you cry out in pain when something sharp pierces your foot while walking, that’s neurons at work.

If you give a whoop of joy at seeing your favourite player score a goal, that’s again due to the neural network in your body.

Neurons are connected to each other as well as to other tissues, so that they can carry information about everything happening around us and within us to the brain.

Say you are walking down the road during the rainy season and come across a big muddy patch. Your neural network transmits everything your eyes take in, such as the puddle's size and depth, the colour of the water, any path through it and the surroundings, to the brain. The brain analyses this information, draws on past encounters, decides the best path to take and sends instructions back via the neural network to the right body parts.

That’s how your hands hitch up your pants and you take long strides along the right side of the puddle.

For any event, each neuron receives information through chemical signals (chemical messengers) from multiple neurons. Only when the sum of these signals reaches a threshold value does the neuron fire and pass the information on to other neurons.

That’s how you react differently to a stone, a pin or a leaf touching your skin. Sometimes you cry out in pain; at other times you just brush it off and move on.


Now that you understand, at a very high level, how neurons and neural networks work, let’s dive into how scientists have been trying to emulate biological neural networks through artificial neural networks.

From now on, when I say neural network or neural net, I mean artificial neural network.

History of artificial neural networks

In 1959, Bernard Widrow and Marcian Hoff of Stanford University developed two models called ADALINE (ADAptive LINear Element) and MADALINE (Multiple ADAptive LINear Elements). ADALINE could recognise patterns in a stream of binary bits from a telephone line and predict the next bit.

MADALINE was used as an adaptive filter to eliminate echoes on telephone lines. It was the first neural network applied to a real-world problem, and it is still in commercial use.

The Widrow-Hoff rule

In 1962, Widrow and Hoff developed a learning procedure that came to be called the Widrow-Hoff rule (today also known as the delta rule or the least mean squares, LMS, rule). The idea was that while a single perceptron may contribute a big error to the output, distributing that error across the network, or at least to adjacent perceptrons, decreases the overall error of the neural network.

A perceptron is the mathematical model of a biological neuron in an artificial neural network.

Each perceptron runs an algorithm that emulates the working of a single biological neuron. In theory, the error can be minimised by distributing it correctly over all the perceptrons in a layer, but in practice this was difficult to implement because of the lack of processing power at the time.
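In modern notation, the Widrow-Hoff rule nudges each weight of a linear unit by η(t - y)x, where t is the target output, y the unit's actual output, x the input on that connection and η a small learning rate. The sketch below trains a single ADALINE-style unit this way. The training data (logical OR, encoded with -1 and +1), the learning rate and the number of epochs are illustrative assumptions, not details of Widrow and Hoff's original hardware.

```python
# A minimal ADALINE-style linear unit trained with the Widrow-Hoff (LMS / delta) rule.
# The data set, learning rate and epoch count are illustrative assumptions.


def train_adaline(samples, learning_rate=0.1, epochs=50):
    """samples: list of (inputs, target) pairs. Returns (weights, bias)."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # Linear output: no step function is applied while learning.
            output = sum(w * x for w, x in zip(weights, inputs)) + bias
            error = target - output
            # Widrow-Hoff update: each weight moves in proportion to error * input.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


def classify(weights, bias, inputs):
    """Threshold the trained linear output at 0 to get a -1/+1 answer."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias >= 0 else -1


# Logical OR, with -1 meaning "false" and +1 meaning "true".
OR_DATA = [([-1, -1], -1), ([-1, 1], 1), ([1, -1], 1), ([1, 1], 1)]

w, b = train_adaline(OR_DATA)
print([classify(w, b, x) for x, _ in OR_DATA])  # [-1, 1, 1, 1]
```

Note that the error used for learning is measured before the threshold is applied; that is what distinguishes the Widrow-Hoff rule from simply counting right and wrong answers.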

So the von Neumann architecture took over the computing scene, and neural networks had to take a back seat. The continuing discussion about whether “thinking machines” were desirable at all also played a part.

A debate that continues to this day.

The first multilayered network, an unsupervised one, was developed in 1975. We will see what unsupervised learning means in a little while.

In 1982, many developments took place simultaneously:

- John Hopfield of Caltech proposed using bidirectional connections in neural networks instead of the unidirectional ones used until then. He presented this in a paper to the National Academy of Sciences.

- Reilly and Cooper used a hybrid network where each layer used a different strategy to solve problems.

- Japan announced its Fifth Generation Computer Systems project, which aimed to build massively parallel computers for AI instead of machines based on the conventional von Neumann architecture.

Researchers began extending the Widrow-Hoff rule to networks with multiple layers. This eventually led to backpropagation networks, which distribute the error backwards through all the layers.

The 2000s saw a resurgence of ANNs

This was largely due to the increasing processing power of GPUs from the late 2000s onwards. Faster hardware means neural networks can process much more data at much higher speeds.

Now let’s see how biological neural networks are implemented as artificial neural networks. Each neuron is implemented mathematically as a perceptron.

How perceptrons work

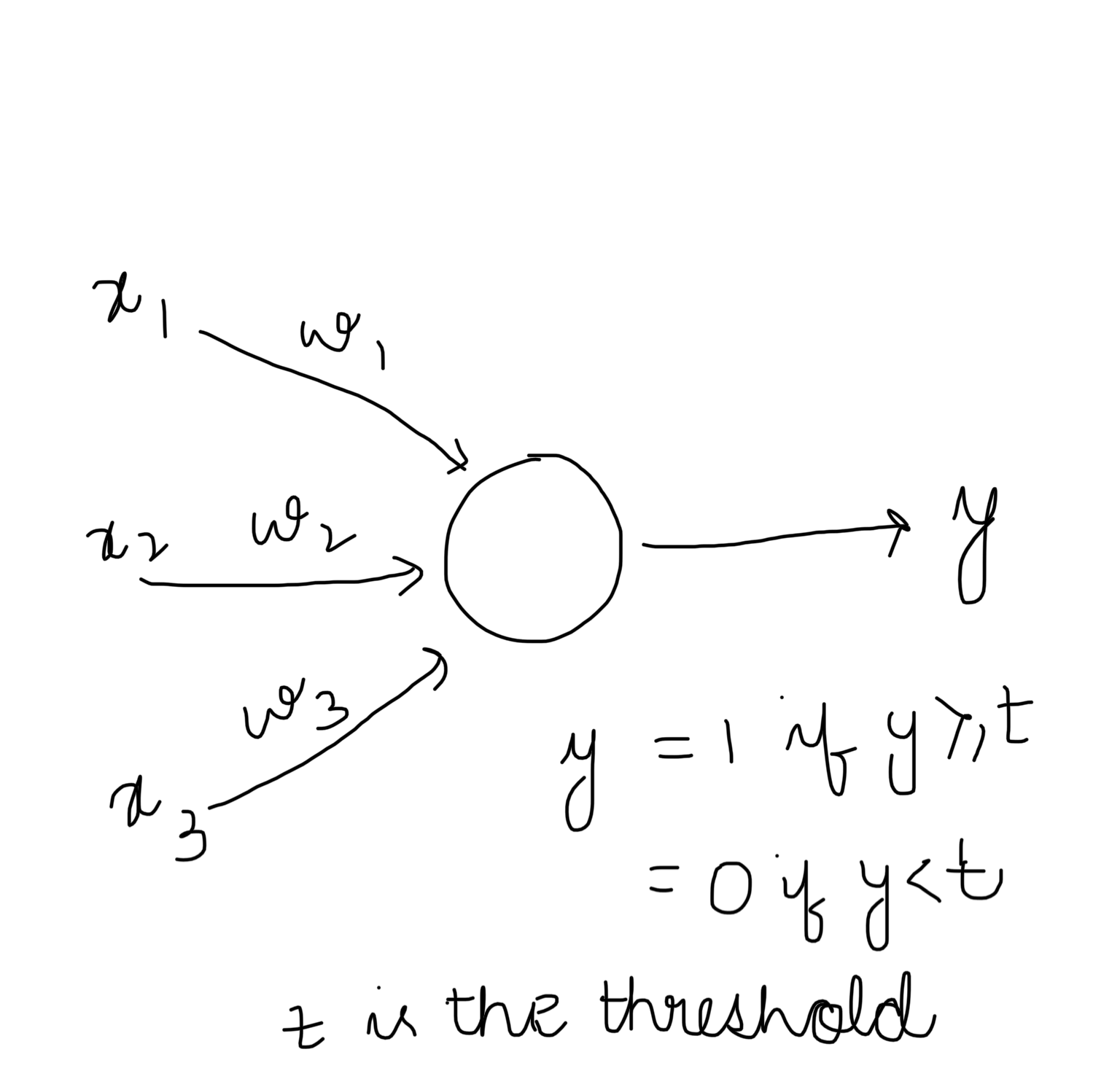

Here,

x — input

w — weight

y — output

t — threshold

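As you can see, the perceptron receives three inputs, x1, x2 and x3. Each one has a weight associated with it, w1, w2 and w3. The perceptron multiplies each input by its weight and adds up the results; if this weighted sum reaches the threshold t, the output y is 1, otherwise it is 0.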
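This perceptron translates almost directly into code. The sketch below assumes the classic rule: the output y is 1 when the weighted sum w1x1 + w2x2 + w3x3 reaches the threshold t, and 0 otherwise. The function name `perceptron` and the input, weight and threshold values are made up for illustration.

```python
def perceptron(inputs, weights, threshold):
    """Classic threshold perceptron: y = 1 if sum(w_i * x_i) >= t, else y = 0."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum >= threshold else 0


# Three inputs x1, x2, x3 and weights w1, w2, w3 (made-up values).
x = [1, 0, 1]
w = [2, 1, 3]
print(perceptron(x, w, threshold=4))  # 2 + 0 + 3 = 5 >= 4, prints 1
print(perceptron(x, w, threshold=6))  # 5 < 6, prints 0
```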

The swimming problem

Now let us take a real-life problem and see how it can be solved using an artificial neural network.

Imagine you want to go swimming in the sea, but whether you can go or not depends on many factors, such as:

- You should not have a cough or cold

- The waves should not be higher than 5 m

- The weather should be sunny

- If it is cloudy but the waves are just 2 m high, you can go

Observe that there are many factors involved here — weather, wave height and your health.

These factors are the inputs, and the relative importance of each factor is the weight associated with it.

Whether you go swimming or not is the output, and the threshold is the value that the weighted sum of the inputs must reach for the output to be a success, which in this case means you can go swimming.
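To make this concrete, here is one way the swimming decision could be encoded as a single threshold perceptron. The inputs are whether you are healthy (1 or 0), whether it is sunny (1) or cloudy (0), and the wave height in metres. The weights (10, 3 and -1) and the threshold (8) are hypothetical values picked by hand so that the rules above come out right; they were not learned by any network.

```python
def perceptron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of the inputs reaches the threshold."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum >= threshold else 0


# Hypothetical, hand-picked values (not learned by any network):
# health matters most, sunshine helps, every metre of wave height counts against you.
WEIGHTS = [10, 3, -1]  # for [healthy, sunny, wave height in metres]
THRESHOLD = 8


def can_go_swimming(healthy, sunny, wave_height_m):
    inputs = [1 if healthy else 0, 1 if sunny else 0, wave_height_m]
    return perceptron(inputs, WEIGHTS, THRESHOLD)


print(can_go_swimming(True, True, 4))    # 10 + 3 - 4 = 9 >= 8, prints 1
print(can_go_swimming(True, True, 6))    # 10 + 3 - 6 = 7 < 8, prints 0 (waves too high)
print(can_go_swimming(True, False, 2))   # 10 + 0 - 2 = 8 >= 8, prints 1 (cloudy, small waves)
print(can_go_swimming(True, False, 3))   # 10 + 0 - 3 = 7 < 8, prints 0
print(can_go_swimming(False, True, 1))   # 0 + 3 - 1 = 2 < 8, prints 0 (cough or cold)
```

With these numbers, being unwell can never be outweighed: even on a sunny day with a flat sea the sum is at most 3, well below the threshold. That is how the weights encode the relative importance of each factor.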

Introducing bias

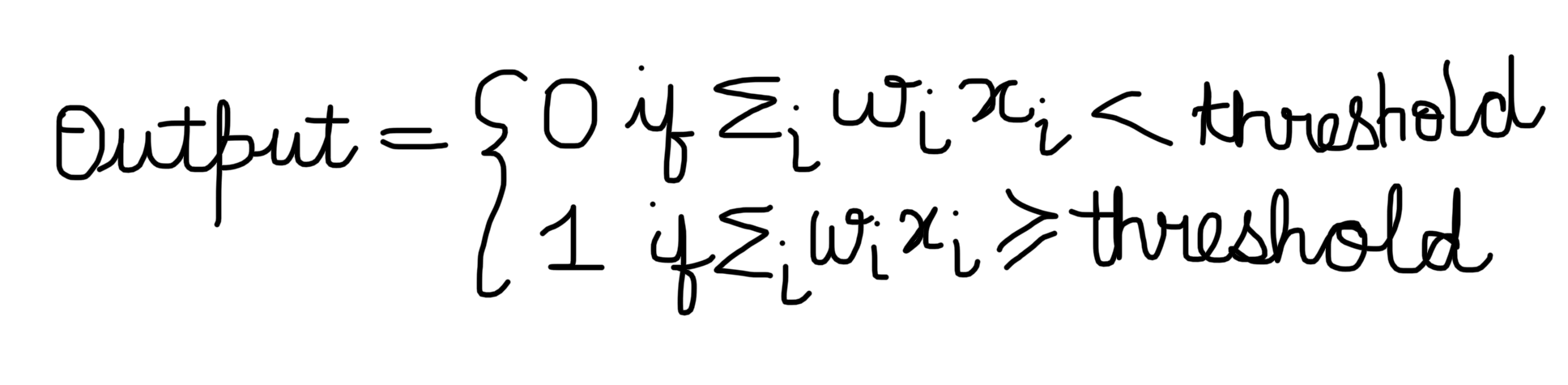

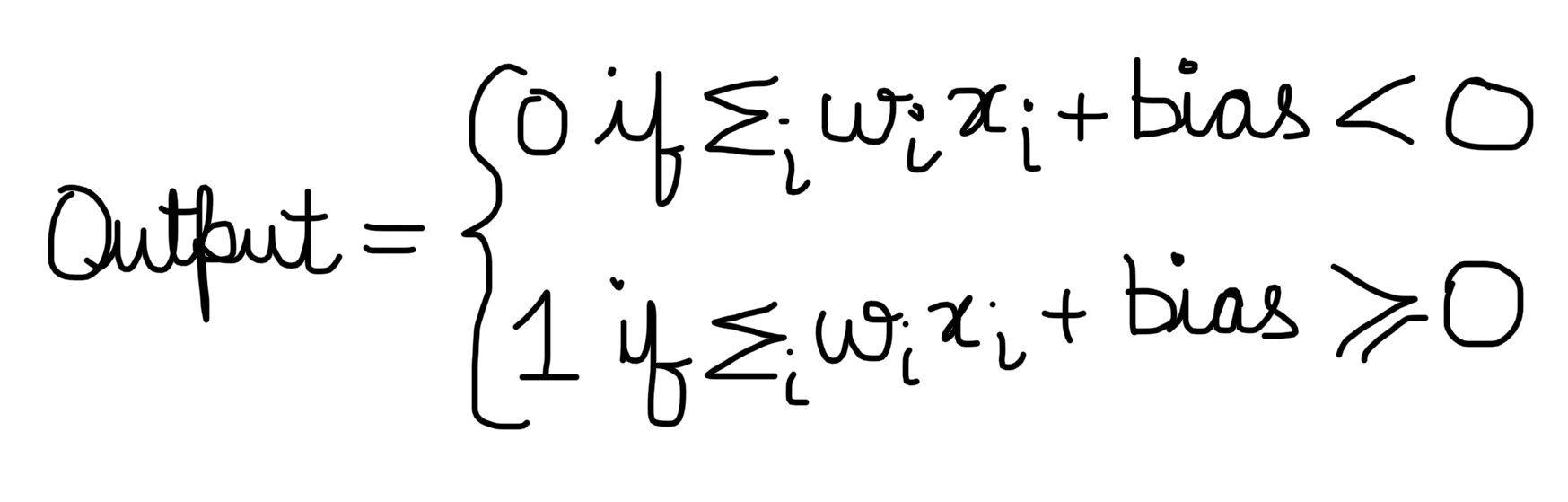

The learning algorithm varies the weights until the desired outcome is achieved for a given set of inputs. This is easier if one side of the equation is zero, so we move the threshold t to the other side and introduce a new term called the bias, b = -t. Our equation then becomes:

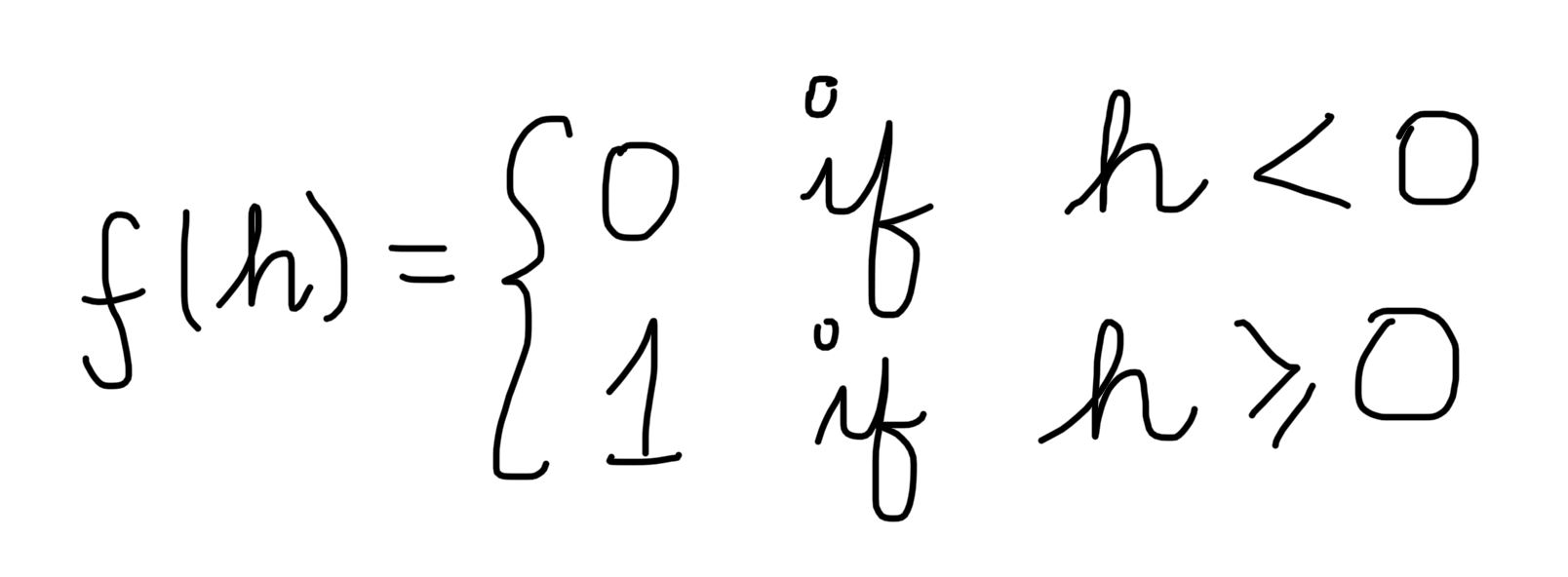
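The step from a threshold to a bias is just a rearrangement of the perceptron's firing condition. Written with the symbols used for the perceptron (inputs x_i, weights w_i, threshold t), and assuming the three-input perceptron from the figure, it reads:

```latex
\sum_{i=1}^{3} w_i x_i \ge t
\;\iff\; \sum_{i=1}^{3} w_i x_i - t \ge 0
\;\iff\; \sum_{i=1}^{3} w_i x_i + b \ge 0, \qquad b = -t
```

The bias can then be learned like any other weight, by treating it as the weight on an extra input that is always 1.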

Activation function

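But there is still a problem. If any weight is very large or very small, a single input can dominate the weighted sum, and the sum can grow without limit. To keep the output within a fixed range, so that a single weight cannot push it arbitrarily far, we pass the weighted sum through an activation function. The simplest one is the step function, which outputs 1 when its input is zero or more and 0 otherwise.

The most common step function is the Heaviside step function. It is one of the simplest activation functions and was used in the earliest perceptrons, but it is rarely used in modern deep neural networks: its slope is zero everywhere except at the jump, which gives gradient-based training methods such as backpropagation nothing to work with.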

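Now our equation for the perceptron looks like this:

$$y = H\left(\sum_{i=1}^{3} w_i x_i + b\right), \qquad H(z) = \begin{cases} 1 & \text{if } z \ge 0 \\ 0 & \text{if } z < 0 \end{cases}$$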

Besides the step function, neural networks use other types of activation functions, such as:

- Sigmoid function

- Tanh function

- Softmax

- ReLU (rectified linear unit)

The sigmoid function is one of the most important, because its output lies between 0 and 1 and changes smoothly in between. This can emulate real-life situations better than the step function, because in real life the outcome is rarely exactly 0 or 1; it is usually somewhere in between. Its smoothness also makes it suitable for gradient-based training such as backpropagation.
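Each of these activation functions is only a few lines of code. The sketch below uses only Python's standard `math` module; the function names are illustrative. The sigmoid is written in a numerically stable two-branch form (an implementation choice, not something this post prescribes) so that very negative inputs do not overflow, and softmax works on a whole list of scores, typically the output layer, rather than on a single value.

```python
import math


def step(z):
    """Heaviside step function: 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0


def sigmoid(z):
    """Squashes any real number into the range (0, 1)."""
    # Two-branch form so math.exp never overflows for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def tanh(z):
    """Like the sigmoid, but the output lies between -1 and 1."""
    return math.tanh(z)


def relu(z):
    """Rectified linear unit: keeps positive values, turns negative ones into 0."""
    return max(0.0, z)


def softmax(scores):
    """Turns a list of scores into probabilities that add up to 1."""
    biggest = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  step={step(z)}  sigmoid={sigmoid(z):.3f}  "
          f"tanh={tanh(z):+.3f}  relu={relu(z):.1f}")
print([round(p, 3) for p in softmax([2.0, 1.0, 0.1])])
```

Notice how the step function jumps straight from 0 to 1, while the sigmoid moves gradually through 0.5 at z = 0.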

Now let us extend the real-life swimming problem we started out with.

Multiple perceptrons

The weather itself can have multiple factors like temperature, humidity, solar and lunar positions, etc.

Your health can have multiple factors like body temperature, runny nose, sneezing, allergens, etc.

That is why, ideally, the inputs for weather and health (say, x1 and x2) should themselves be outputs from perceptrons that take those underlying factors (humidity, temperature, etc.) as inputs.

This leads us to a layer with multiple perceptrons, each with its own weights and inputs.

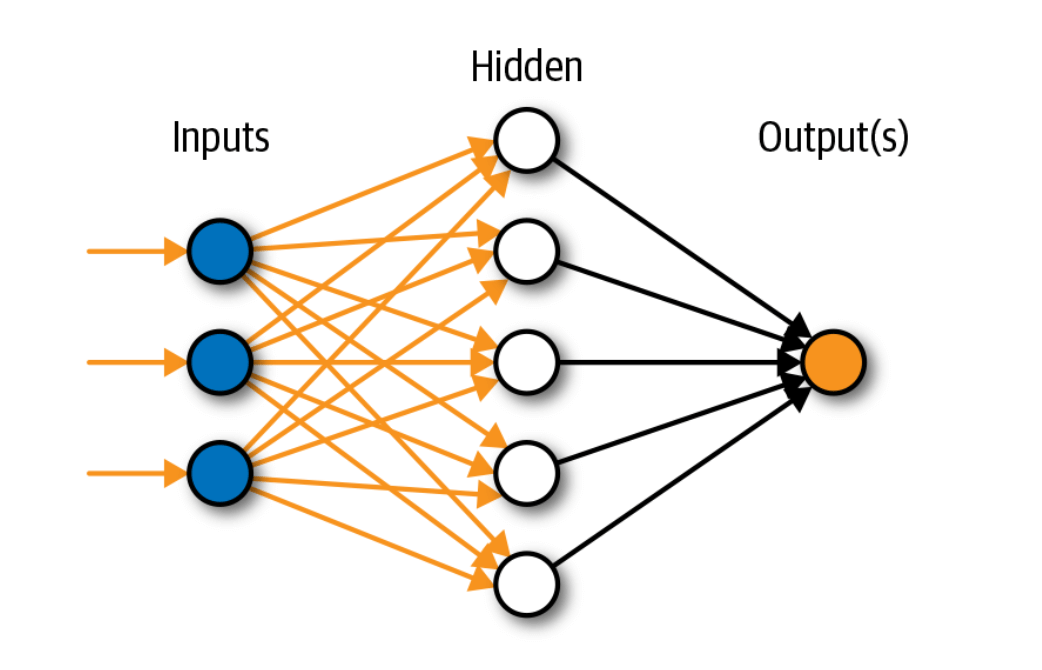
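The idea of feeding the outputs of one layer of perceptrons into another can be sketched as follows. Every perceptron here uses the bias form (it fires when the weighted sum plus the bias is at least 0). The factors, such as `is_warm` or `has_fever`, and all weights and biases are hypothetical, hand-picked values for illustration; a real network would learn them.

```python
def perceptron(inputs, weights, bias):
    """Step-activated perceptron in bias form: fires when sum(w*x) + b >= 0."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z >= 0 else 0


def layer(inputs_per_unit, units):
    """Run several perceptrons side by side; each has its own inputs, weights and bias."""
    return [perceptron(x, w, b) for x, (w, b) in zip(inputs_per_unit, units)]


# Hypothetical hand-picked (weights, bias) for each perceptron.
WEATHER_UNIT = ([2, -2], -1)        # inputs: [is_warm, is_humid]
HEALTH_UNIT = ([-3, -1, -1], 1.5)   # inputs: [has_fever, runny_nose, sneezing]
DECISION_UNIT = ([1, 1], -2)        # inputs: [weather_ok, healthy]; fires only if both are 1


def can_go_swimming(weather_factors, health_factors):
    # First layer: turn the raw factors into x1 (weather) and x2 (health).
    x1, x2 = layer([weather_factors, health_factors], [WEATHER_UNIT, HEALTH_UNIT])
    # Second layer: one perceptron combines them into the final decision.
    return perceptron([x1, x2], *DECISION_UNIT)


print(can_go_swimming([1, 0], [0, 0, 0]))  # warm, not humid, no symptoms: prints 1
print(can_go_swimming([1, 1], [0, 0, 0]))  # warm but humid: prints 0
print(can_go_swimming([1, 0], [0, 1, 1]))  # runny nose and sneezing: prints 0
```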

A realistic artificial neural network would look something like this:

[Figure: How artificial neural networks function]

You can clearly identify the input and output layers, but there is another layer in the middle. This layer has no direct connection with the outside world, so it is called a hidden layer.

Also observe that perceptrons in the same layer are not connected to each other, while each perceptron in a layer is connected to every perceptron in the next layer. Stacking layers like this, with a non-linear activation function in each perceptron, is what lets ANNs solve non-linear problems that a single perceptron cannot.
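The classic illustration is XOR, which outputs 1 when exactly one of its two inputs is 1. No single perceptron can compute it, because no straight line separates its 1s from its 0s, but a network with a hidden layer of two perceptrons can. The weights below are set by hand (a hypothetical choice for illustration, not learned), and the brute-force search at the end only checks a small range of integer weights, so it illustrates the limitation rather than proving it.

```python
from itertools import product


def perceptron(inputs, weights, bias):
    """Step-activated perceptron: fires when sum(w*x) + b >= 0."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z >= 0 else 0


def xor_network(x1, x2):
    """Two-layer, fully connected network with hand-set weights."""
    # Hidden layer: both perceptrons see both inputs, but not each other.
    h_or = perceptron([x1, x2], [1, 1], -1)   # fires if at least one input is 1
    h_and = perceptron([x1, x2], [1, 1], -2)  # fires only if both inputs are 1
    # Output layer: "OR but not AND" is exactly XOR.
    return perceptron([h_or, h_and], [1, -1], -1)


XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}
print([xor_network(a, c) for a, c in XOR_TABLE])  # [0, 1, 1, 0]

# For contrast: search small integer weights for a SINGLE perceptron that computes XOR.
single_solutions = [
    (w1, w2, b)
    for w1, w2, b in product(range(-3, 4), repeat=3)
    if all(perceptron([a, c], [w1, w2], b) == y for (a, c), y in XOR_TABLE.items())
]
print(len(single_solutions))  # 0: none of the 343 candidates works
```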

How artificial neural networks learn

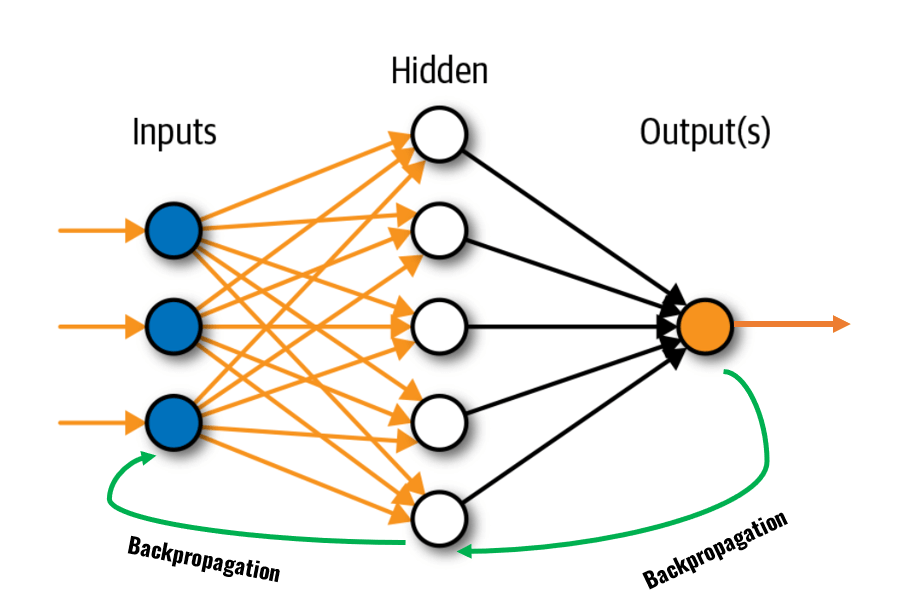

Observe that the output from each perceptron is fed to the perceptrons in the next layer, and never back to an earlier one. That is why this kind of ANN is called a feedforward network.

Feedforward networks are usually trained through supervised learning. In supervised learning, the training dataset is made of input-output pairs, i.e., labelled data. Each input-output pair is fed to the network multiple times until the network learns the relationship between the given input and output.

The network starts with random weights. Every time it is presented with the data, the training algorithm adjusts these weights in a way that makes the output error smaller and smaller.

Eventually, after many cycles over the same input-output pairs, the network learns the relationship between them and produces the desired output.
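This training loop can be sketched with the classic perceptron learning rule, a close relative of the Widrow-Hoff rule: start from random weights, show the network each labelled input-output pair, and nudge the weights whenever its answer is wrong. The labelled data set (the logical AND function), the learning rate, the seed and the epoch cap are illustrative assumptions. Because a single perceptron can represent AND, the perceptron convergence theorem guarantees that the loop eventually gets every pair right.

```python
import random


def predict(weights, bias, inputs):
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z >= 0 else 0


def train(data, learning_rate=0.1, max_epochs=1000, seed=0):
    """Supervised training with the perceptron learning rule.
    data: list of (inputs, label) pairs, i.e. labelled examples."""
    rng = random.Random(seed)
    n = len(data[0][0])
    weights = [rng.uniform(-1, 1) for _ in range(n)]  # start from random weights
    bias = rng.uniform(-1, 1)
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for inputs, label in data:  # show every labelled pair again
            error = label - predict(weights, bias, inputs)  # -1, 0 or +1
            if error:
                errors += 1
                weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if errors == 0:  # a full pass with no mistakes: every pair is answered correctly
            return weights, bias, epoch
    return weights, bias, max_epochs


AND_DATA = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias, epochs = train(AND_DATA)
print("epochs needed:", epochs)
print([predict(weights, bias, x) for x, _ in AND_DATA])  # [0, 0, 0, 1]
```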

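In backpropagation, the error, i.e. the difference between the network’s output and the desired output, is propagated backwards through the network, layer by layer, to work out how much each weight contributed to it. Each weight is then adjusted in the direction that reduces the error, so the network reaches the desired outcome faster.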
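Backpropagation is easier to follow in code than in words. The sketch below trains a tiny network (two inputs, a hidden layer of sigmoid units and one sigmoid output) on the XOR problem in plain Python. The class name `TinyNetwork`, the layer sizes, the learning rate, the number of epochs, the random seed and the mean-squared-error loss are all illustrative choices, not the only way to do it. Real projects normally use a library such as PyTorch or TensorFlow, which compute these gradients automatically.

```python
import math
import random


def sigmoid(z):
    # Two-branch form avoids overflow in math.exp for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class TinyNetwork:
    """2 inputs -> n_hidden sigmoid units -> 1 sigmoid output."""

    def __init__(self, n_hidden=4, seed=1):
        rng = random.Random(seed)  # random starting weights, reproducible via the seed
        self.w_hidden = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
        self.b_hidden = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b_out = rng.uniform(-1, 1)

    def forward(self, x):
        """Feed the input forward through both layers."""
        hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
                  for ws, b in zip(self.w_hidden, self.b_hidden)]
        output = sigmoid(sum(w * h for w, h in zip(self.w_out, hidden)) + self.b_out)
        return hidden, output

    def loss(self, data):
        """Mean squared error between outputs and targets."""
        return sum((self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    def train_step(self, x, target, lr):
        hidden, output = self.forward(x)
        # 1. Error at the output, times the sigmoid's slope s * (1 - s).
        #    (The factor 2 from the squared error is folded into lr.)
        delta_out = (output - target) * output * (1 - output)
        # 2. Send the error backwards: each hidden unit's share is proportional
        #    to the weight connecting it to the output (old weights are used here).
        delta_hidden = [delta_out * w * h * (1 - h) for w, h in zip(self.w_out, hidden)]
        # 3. Move every weight a small step against its share of the error.
        for j, h in enumerate(hidden):
            self.w_out[j] -= lr * delta_out * h
        self.b_out -= lr * delta_out
        for j, d in enumerate(delta_hidden):
            for i, xi in enumerate(x):
                self.w_hidden[j][i] -= lr * d * xi
            self.b_hidden[j] -= lr * d


XOR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

net = TinyNetwork()
before = net.loss(XOR_DATA)
for _ in range(5000):
    for x, t in XOR_DATA:
        net.train_step(x, t, lr=0.5)
after = net.loss(XOR_DATA)
print(f"loss before training: {before:.3f}, after: {after:.3f}")
print("rounded outputs:", [round(net.forward(x)[1]) for x, _ in XOR_DATA])
```

Step 2 is the "back" in backpropagation: the hidden layer never sees the target directly, yet each hidden unit still learns from the output error passed back to it.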

Here is an example of feedback. Remember the puddle example I gave earlier? Say that despite all that thinking (which your brain does for you), you still end up soiling your sneakers. This information is immediately fed back to the brain, and the episode will be used the next time you encounter a similar situation.

Types of artificial neural networks

There are many types of neural nets, but these three are the most popular:

- ANN: We just discussed this in detail, so I am not adding anything here!

- CNN: Convolutional Neural Networks use small filters that slide across the input, so each node responds to a small local patch of it, such as a region of an image. They are used for image processing.

- RNN: Recurrent Neural Networks work on sequential data, such as text, speech or readings collected over a period of time, by carrying a hidden state from one step of the sequence to the next. They are used for language translation, natural language processing (NLP), speech recognition, etc.

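The core operations behind CNNs and RNNs can each be sketched in a few lines. `convolve2d` slides a small filter over an image, which is what a convolutional layer does (real CNNs learn the filter values and apply many filters at once). `run_rnn` shows the defining feature of a recurrent network: a hidden state carried from one step of a sequence to the next. Both function names, the filter, the weights and the data are hypothetical values chosen for illustration, and a real RNN would use vectors and weight matrices rather than single numbers.

```python
import math


def convolve2d(image, kernel):
    """'Valid' 2-D convolution as used in CNN layers (no padding, stride 1).
    Like most deep learning libraries, it does not flip the kernel, so strictly
    speaking it is a cross-correlation. Each output value looks at one small patch."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
         for c in range(out_w)]
        for r in range(out_h)
    ]


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Minimal recurrent unit with a single hidden value h:
    h_t = tanh(w_x * x_t + w_h * h_(t-1) + b)."""
    h = 0.0  # the network's "memory" starts empty
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)  # new state mixes the input with the old state
        states.append(h)
    return states


# A 4x4 "image": dark left half, bright right half.
IMAGE = [[0, 0, 9, 9] for _ in range(4)]
# A hypothetical 2x2 filter that responds to dark-to-bright vertical edges.
EDGE_FILTER = [[-1, 1], [-1, 1]]
print(convolve2d(IMAGE, EDGE_FILTER))  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]

# The inputs at steps 2 and 3 are both 0, yet the states differ:
# the hidden state remembers the 1 that came first.
print([round(h, 3) for h in run_rnn([1, 0, 0])])
```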

Advantages of artificial neural networks

There are a few advantages of neural networks over the classical input-process-output (IPO) architecture of computer systems:

- ANNs can learn and model complex, non-linear relationships.

- After training, they can find relationships and patterns in new data.

- They place few restrictions on the input variables, for example on how the input data must be distributed.

- Neural network systems are more tolerant of faults and incomplete data than IPO systems.

Some related terms

A discussion on artificial neural networks would be incomplete without talking about a few related terms.

Machine learning

Machine learning is a branch of artificial intelligence used to develop computer systems that

- analyse training data to identify patterns

- draw their own inferences from those patterns and

- use these inferences to work on new data.

Machine learning systems use algorithms and statistical models that facilitate both supervised and unsupervised learning.

In a nutshell, machine learning is learning by example, like a child learns.

Unsupervised learning

In unsupervised learning, AI systems are trained using unlabeled data. So, the machines are free to draw their own conclusions from the data provided to them.

As we saw earlier, in supervised learning the output for a given set of training data is known. In unsupervised learning, the output is not known, so the system must identify patterns by itself.

Deep learning

A neural network with two or more hidden layers is called a deep neural network, and training such networks is called deep learning.

Natural Language Processing

NLP is a branch of AI that helps computers understand the way humans speak and write. As there are so many languages and each language has so many dialects and different ways of speaking/writing, such systems need to train on huge unstructured datasets.

What next?

You now have a basic understanding of how artificial neural networks help AI systems train on available datasets. In my next blog post in the AI series, I will be talking about Computer Vision.

Or would you like me to discuss some other topic? Tell me in the comments.

If you want me to explore some part of the AI project cycle or applications of AI further, do let me know. I will definitely take that up next.
